Today’s Patent – Dialogue Generation Method Based on Transformer Architecture

This invention (Chinese patent CN111274362B) was invented by Cai Xiantao and Yuan Yiming and is dated 01-02-2020. It is currently assigned to Wuhan University (WHU).

Current dialogue generation models rely on Transformer-based architectures such as GPT and T5, which use deep learning techniques to process and generate conversational responses. Traditional methods include sequence-to-sequence (Seq2Seq) models, which often produce generic or inconsistent replies due to a lack of structured knowledge integration.

Two primary strategies exist for using unstructured knowledge in open-domain dialogue systems. The first treats the problem as a reading comprehension task and selects a knowledge segment as the response. Because the reply is restricted to existing text, it often sounds unnatural. The second treats it as a text generation task, which allows more flexibility but often produces responses that mix relevant and irrelevant knowledge, since no explicit selection step is applied.

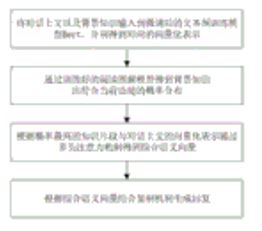

This invention improves dialogue generation by combining knowledge selection with a copy mechanism, ensuring more natural and contextually relevant responses. First, it selects knowledge segments most relevant to the ongoing conversation, preventing irrelevant information from influencing the generated text. Then, by employing a copy mechanism, the model seamlessly integrates relevant knowledge into replies, maintaining coherence and factual accuracy. This approach enhances response quality, making AI conversations more informative and human-like.
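The two steps described above can be illustrated with a minimal sketch. It is not the patented implementation: the summary does not give the scoring function or the exact form of the copy mechanism, so the sketch assumes dot-product relevance scoring for knowledge selection and a pointer-generator style mixture for copying. All names (`select_knowledge`, `copy_mixture`, `p_gen`) and the toy vectors are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def select_knowledge(context_vec, segment_vecs, top_k=1):
    """Return indices of the top_k segments most relevant to the context.

    Assumption: relevance is a plain dot product between a context
    embedding and each knowledge-segment embedding.
    """
    scores = [sum(c * s for c, s in zip(context_vec, seg)) for seg in segment_vecs]
    ranked = sorted(range(len(segment_vecs)), key=lambda i: scores[i], reverse=True)
    return ranked[:top_k]


def copy_mixture(vocab_probs, copy_attention, source_tokens, p_gen):
    """Blend a generation distribution with a copy distribution.

    Assumption: pointer-generator style mixing, where p_gen weights the
    vocabulary distribution and (1 - p_gen) weights attention over the
    tokens of the selected knowledge segment.
    """
    final = {tok: p_gen * p for tok, p in vocab_probs.items()}
    for tok, attn in zip(source_tokens, copy_attention):
        final[tok] = final.get(tok, 0.0) + (1.0 - p_gen) * attn
    return final


# Toy example: two knowledge segments, a two-dimensional context embedding.
context = [1.0, 0.0]
segments = [[0.1, 0.9], [0.8, 0.2]]
chosen = select_knowledge(context, segments)

# Tokens of the selected segment and attention weights over them.
source_tokens = ["Wuhan", "University"]
attention = softmax([2.0, 0.5])

# "Wuhan" is absent from the toy vocabulary, so it can only be produced by copying.
vocab_probs = {"the": 0.6, "University": 0.4}
dist = copy_mixture(vocab_probs, attention, source_tokens, p_gen=0.5)
```

In this toy setup the second segment is selected because it has the larger dot product with the context, and the out-of-vocabulary token "Wuhan" still receives probability mass through the copy path. That is the property that lets a model of this kind reproduce factual terms from the selected knowledge.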

